Can GPT-4 *Actually* Write Code?

I test GPT 4's code-writing capabilities with some actual real world problems.

Since ChatGPT came out I’ve seen quite a lot of people posting about its capability to write code. People have posted about how they had it design and implement a number puzzle game (without realizing that that game it “invented” already exists), how they’ve had it clone pong, and hell I’ve even used it to write a few simple python utility scripts. It’s very capable, and a quite useful tool.

But, there is a commonality in all of these examples people have been posting. They are all problems that have been solved before, or extremely minor modifications to those problems. And while, to be fair, a *lot* of programming is literally just that- gluing together existing solutions and fitting existing code into your specific use case, the *hard* part of programming is solving problems that haven’t been solved before.

So I decided to test it on a particularly difficult “algorithmic” problem I solved a couple years ago. Something small and isolated enough that I could fit it into a ChatGPT prompt, but with enough subtleties that I feel like it would have trouble getting it right.

THE PROBLEM

Let’s start with a description of the actual real-use-case problem. In Mewgenics, movement abilities use pathfinding to get the cat from his origin to his destination.

Cats have a maximum movement range stat (in this case it’s 6) and tiles have a cost (in this case its 1 for basic tiles and 9999 for blocking obstacles). We also have water tiles that cost 2 to path through.

So far, nothing I’ve described is out of the ordinary for grid based pathfinding. Both Dijkstra’s Algorithm and A* can handle tiles with different costs just fine and its trivial to just cut off the path after a certain distance to deal with the maximum movement range.

The complication comes in when we add Fire (and other hazard type tiles) into the mix. Fire tiles don’t cost extra to pathfind through like water tiles do, however a cat really wants to avoid pathing through the fire tile if it can. Shown here is the working solution to that. There’s a lot of more complicated cases as well, if there’s a lot of fire tiles it should go through the least number of them it can and such, and that’s all working well.

Now, its not completely obvious why this is a difficult problem. Or at least, why the solution is more complicated than simply A* with a modified heuristic that considers “desire costs” like fire. It’s a problem that is very subtly different to the standard A* pathfinding, and the solution, while very very close to A*, has a few non-intuitive changes that mattered a lot. This was a “hard” problem, and back in 2020 when I was first adding fire tiles into the game, this pathfinding problem took a couple of days to actually properly solve. The main complication is the limiting factor of “maximum movement range” and how it interacts with both the cost to path through tiles and the “desire cost” used to avoid hazard tiles. You can see in that gif I posted how the path changes as you try to move farther and farther past the fire tile, eventually pathing around it would not be possible within 6 movement, so it has to path through it instead.

And there’s a lot of complication to it beyond the simple cases too:

(14 total movement vs 10 total movement on the same level)

There’s a sort of built in assumption in A* and Dijkstras that, if the shortest path from A to C passes through tile B, that it also takes the shortest path from A to B. So if you’ve found the shortest path to B already, you can just start there and continue on to C. Those algorithms rely on that to be efficient, because you can skip tiles that you’ve already found a shorter path to when pulling stuff of the priority queue, and when reconstructing the path at the end you can rely on this by having each tile store the tile it was reached from during the pathfinding algorithm

This is what I mean: This above situation does not happen with “standard” pathfinding! The best path from A to B does not line up with the best path from A to C, despite the path from A to C containing tile B. This uh, complicates things and breaks some assumptions that existing pathfinding relies on.

MY SOLUTION

So, its A* or Dijkstra with modified costs and heuristics right? Well… almost, but not quite. You can see the code for it below (along with some extra stuff for minimizing bends in the path as well). You’ll notice… it’s not exactly dijkstra or A*, and there’s a lot of stuff in there that is not obvious why its the way it is. In fact, I wrote this in 2020 and have since cleared it from my mind… it’s not even obvious to me anymore why stuff is the way it is. There was a lot of trial and error to get this to work, a lot of moving things around and doing slight modifications to the algorithm. In preparation for this blog post I double checked and tried to run it back and make this just modified A* again, but every change to simplify it just added in a bunch of pathfinding bugs. So its the way it is. And the train of thought that has led here has mostly been dumped from my mind, since it works already.

Why is the path stored inside the cells that get added to the priority queue, instead of the standard way A* does it where the nodes store which node they were reached from? Why does it check that the path is the best path when popping from the queue instead of when pushing to the queue? Both of these are decently large diversions from A*, but absolutely necessary for this to work apparently.

| struct advanced_weighted_cell { | |

| iVec2D cell; | |

| int cost; | |

| int desire; | |

| int bends = -1; | |

| temppodvector<char> current_path; | |

| int path_value() const { | |

| return cost*100+bends; | |

| } | |

| std::weak_ordering operator <=>(const advanced_weighted_cell& rhs) const { | |

| if(desire != rhs.desire) return desire <=> rhs.desire; | |

| if(cost != rhs.cost) return cost <=> rhs.cost; | |

| if(bends != rhs.bends) return bends <=> rhs.bends; | |

| return std::weak_ordering::equivalent; | |

| } | |

| }; | |

| podvector<iVec2D> TacticsGrid::advanced_pathfind(Character* source, iVec2D begin, iVec2D end, int max_range, bool sunken_only){ | |

| max_range *= pathfind_cost_resolution; | |

| podvector<iVec2D> path; | |

| dynamic_grid<int> pathvalues(width, depth); | |

| for(auto& t:pathvalues) t = INT_MAX; | |

| if(!Debug.CheckAssert(pathvalues.in_bounds(begin)) || !Debug.CheckAssert(pathvalues.in_bounds(end))) { | |

| return path; | |

| } | |

| auto costs = pathfind_costs(source, sunken_only); | |

| std::priority_queue<advanced_weighted_cell, std::vector<advanced_weighted_cell>, std::greater<advanced_weighted_cell>> queue; | |

| queue.push({begin, 0, 0, 0}); | |

| int total_checked = 0; | |

| while(!queue.empty()){ | |

| auto tile = queue.top(); | |

| queue.pop(); | |

| if(tile.path_value() <= pathvalues[tile.cell]){ | |

| pathvalues[tile.cell] = tile.path_value(); | |

| if(tile.cell == end) { | |

| iVec2D current = begin; | |

| for(auto i : tile.current_path){ | |

| current += all_orientations[i]; | |

| path.push_back(current); | |

| } | |

| //Debug.DisplayVariable(total_checked); | |

| return path; | |

| break; | |

| } | |

| for(int i = 0; i<4; i++){ | |

| auto &v = all_orientations[i]; | |

| iVec2D t2 = v+tile.cell; | |

| if(pathvalues.in_bounds(t2)){ | |

| advanced_weighted_cell next = {t2, | |

| tile.cost+costs[t2].enter_cost + costs[tile.cell].exit_cost, | |

| tile.desire+(t2==end?0:costs[t2].desire_cost),// + iso_dist(t2-end), //heuristic is more efficient, but results in paths with non-optimal numbers of bends | |

| tile.bends}; | |

| if(tile.current_path.size() > 0 && i != tile.current_path.back()) next.bends++; | |

| if(next.cost <= max_range && next.path_value() <= pathvalues[t2]){ | |

| next.current_path.resize(tile.current_path.size()+1); //minimize reallocations | |

| if(tile.current_path.size() > 0) { | |

| std::memcpy(next.current_path.data(), tile.current_path.data(), tile.current_path.size()*sizeof(char)); | |

| } | |

| next.current_path.back() = i; | |

| queue.push(next); | |

| total_checked++; | |

| } | |

| } | |

| } | |

| } | |

| } | |

| return path; | |

| } |

Anyway. That’s all the background info for the problem. You’re welcome to try solving it yourself if you want to get a real feel for just how not-trivial it is (or if you think that it really could just be A* with a modified heuristic instead) (and if you are going to try and “suggest” to me ways to improve it, then please actually implement and try it in context first, and know I won’t use it because what I have works fine already, has been *thoroughly* tested on thousands of different cases, and doesn’t have any performance issues either)

LET’S SEE HOW WELL CHATGPT DOES

Here’s the meat of this article. The actual experiment.

Can GPT-4 (via ChatGPT Plus) actually solve this problem?

I asked it

Write a function in code that solves the following problem:

An agent needs to find the best path on a 10x10 tile grid from their current location to a target location.

They have a limited movement range of 5 points

Regular tiles cost 1 point to move through, water tiles cost 2 points to move through.

Fire tiles cost 1 point to move through, but they should avoid pathing through them even if it means taking a longer path to their destination (provided the path is still within their limited movement range)

For the purposes of the experiment, I explicitly left out details of the algorithm, instead gave it a description of the *problem* that needs to be solved. This is how you start with a problem anyway, and I *am* actually curious if there’s maybe a more obvious or trivial solution I missed, so I’d rather not pollute it’s train of thought with implementation details. I also didn’t say what language to code in since I assumed it will work better if it picks python rather than asking it to write C++. I only care about the actual algorithm after all.

ChatGPT responded,

You can use the A* pathfinding algorithm to find the optimal path considering the costs and constraints. Here's a Python function that solves the problem:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters. Learn more about bidirectional Unicode characters

import heapq class Tile: def __init__(self, x, y, tile_type): self.x = x self.y = y self.tile_type = tile_type def cost(self): if self.tile_type == "water": return 2 elif self.tile_type == "fire": return 1 else: return 1 def is_fire(self): return self.tile_type == "fire" def heuristic(a, b): return abs(a.x - b.x) + abs(a.y - b.y) def a_star_search(grid, start, goal, max_movement): frontier = [] heapq.heappush(frontier, (0, start)) came_from = {start: None} cost_so_far = {start: 0} while frontier: current = heapq.heappop(frontier)[1] if current == goal: break for neighbor in get_neighbors(current, grid): new_cost = cost_so_far[current] + neighbor.cost() if new_cost <= max_movement and (neighbor not in cost_so_far or new_cost < cost_so_far[neighbor]) and not neighbor.is_fire(): cost_so_far[neighbor] = new_cost priority = new_cost + heuristic(goal, neighbor) heapq.heappush(frontier, (priority, neighbor)) came_from[neighbor] = current return came_from, cost_so_far def get_neighbors(tile, grid): neighbors = [] for dx, dy in ((-1, 0), (1, 0), (0, -1), (0, 1)): x, y = tile.x + dx, tile.y + dy if 0 <= x < len(grid) and 0 <= y < len(grid[0]): neighbors.append(grid[x][y]) return neighbors def reconstruct_path(came_from, start, goal): path = [goal] current = goal while current != start: current = came_from[current] path.append(current) return path[::-1] def find_best_path(grid, start, goal, max_movement): came_from, cost_so_far = a_star_search(grid, start, goal, max_movement) if goal not in came_from: return None return reconstruct_path(came_from, start, goal)

Which like, it *sorta* understood the problem, though instead of “avoiding” going through fire it just… never goes through fire ever.

So I ask

this code refuses to path through fire, it should still path through fire if it has to in order to reach its destination within its movement range

And it apologized and then fixed the a_star_search function like so

| def a_star_search(grid, start, goal, max_movement): | |

| frontier = [] | |

| heapq.heappush(frontier, (0, start)) | |

| came_from = {start: None} | |

| cost_so_far = {start: 0} | |

| while frontier: | |

| current = heapq.heappop(frontier)[1] | |

| if current == goal: | |

| break | |

| for neighbor in get_neighbors(current, grid): | |

| new_cost = cost_so_far[current] + neighbor.cost() + (1000 if neighbor.is_fire() else 0) | |

| if new_cost - (1000 if neighbor.is_fire() else 0) <= max_movement and (neighbor not in cost_so_far or new_cost < cost_so_far[neighbor]): | |

| cost_so_far[neighbor] = new_cost | |

| priority = new_cost + heuristic(goal, neighbor) | |

| heapq.heappush(frontier, (priority, neighbor)) | |

| came_from[neighbor] = current | |

| return came_from, cost_so_far |

Again, not quite. It made no distinction between the penalty cost for pathing through fire, and the physical cost for pathing through tiles, so it still wouldn’t path through fire (as the +1000 penalty makes fire tiles way out of your movement range).

Anyway I went back and forth with it a few more times, had it generate a test case, and fixed a runtime error that occurred when I actually tried to run it. Landed on this, and I customized the test case to something simple that would fail pretty obviously. It mixed up x and y in its test case stuff, but I didn’t want to argue with it over that so I just dealt with it (its pretty slow to re-generate)

| import heapq | |

| class Tile: | |

| def __init__(self, x, y, tile_type): | |

| self.x = x | |

| self.y = y | |

| self.tile_type = tile_type | |

| def cost(self): | |

| if self.tile_type == "water": | |

| return 2 | |

| elif self.tile_type == "fire": | |

| return 1 | |

| else: | |

| return 1 | |

| def is_fire(self): | |

| return self.tile_type == "fire" | |

| def heuristic(a, b): | |

| return abs(a.x - b.x) + abs(a.y - b.y) | |

| def a_star_search(grid, start, goal, max_movement): | |

| frontier = [] | |

| heapq.heappush(frontier, (0, 0, start)) | |

| came_from = {start: None} | |

| cost_so_far = {start: 0} | |

| counter = 1 | |

| while frontier: | |

| _, _, current = heapq.heappop(frontier) | |

| if current == goal: | |

| break | |

| for neighbor in get_neighbors(current, grid): | |

| new_cost = cost_so_far[current] + neighbor.cost() | |

| if new_cost <= max_movement and (neighbor not in cost_so_far or new_cost < cost_so_far[neighbor]): | |

| cost_so_far[neighbor] = new_cost | |

| priority = new_cost + heuristic(goal, neighbor) + (1000 if neighbor.is_fire() and not (current.is_fire() and neighbor == goal) else 0) | |

| heapq.heappush(frontier, (priority, counter, neighbor)) | |

| came_from[neighbor] = current | |

| counter += 1 | |

| return came_from, cost_so_far | |

| def get_neighbors(tile, grid): | |

| neighbors = [] | |

| for dx, dy in ((-1, 0), (1, 0), (0, -1), (0, 1)): | |

| x, y = tile.x + dx, tile.y + dy | |

| if 0 <= x < len(grid) and 0 <= y < len(grid[0]): | |

| neighbors.append(grid[x][y]) | |

| return neighbors | |

| def reconstruct_path(came_from, start, goal): | |

| path = [goal] | |

| current = goal | |

| while current != start: | |

| current = came_from[current] | |

| path.append(current) | |

| return path[::-1] | |

| def find_best_path(grid, start, goal, max_movement): | |

| came_from, cost_so_far = a_star_search(grid, start, goal, max_movement) | |

| if goal not in came_from: | |

| return None | |

| return reconstruct_path(came_from, start, goal) | |

| def create_tile_grid(letter_grid): | |

| tile_grid = [] | |

| for x, row in enumerate(letter_grid): | |

| tile_row = [] | |

| for y, tile_type in enumerate(row): | |

| if tile_type == "R": | |

| tile_row.append(Tile(x, y, "regular")) | |

| elif tile_type == "W": | |

| tile_row.append(Tile(x, y, "water")) | |

| elif tile_type == "F": | |

| tile_row.append(Tile(x, y, "fire")) | |

| tile_grid.append(tile_row) | |

| return tile_grid | |

| def test_case(letter_grid, start_coords, goal_coords, max_movement): | |

| grid = create_tile_grid(letter_grid) | |

| start = grid[start_coords[0]][start_coords[1]] | |

| goal = grid[goal_coords[0]][goal_coords[1]] | |

| path = find_best_path(grid, start, goal, max_movement) | |

| if path: | |

| print("Path found:") | |

| for tile in path: | |

| print(f"({tile.x}, {tile.y}) -> {tile.tile_type}") | |

| else: | |

| print("No path found within the movement range.") | |

| letter_grid = [ | |

| ["R", "F", "F", "F", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ["R", "R", "R", "R", "R", "R", "R", "R", "R", "R"], | |

| ] | |

| start_coords = (0, 0) | |

| goal_coords = (0, 3) | |

| max_movement = 20 | |

| test_case(letter_grid, start_coords, goal_coords, max_movement) |

On the test case given, because it ends on a fire tile it just ignores ever trying to avoid the fire tiles.

I asked it to fix it, and it gave me a solution that went back to just failing to pathfind through fire tiles at all.

I asked it to fix that, and it went in a circle back to the one it had before.

After going in circles a few more times, I decided that was it. It got *close*. It seemed to understand the problem, but it could not actually properly solve it.

Would this have helped me back in 2020? Probably not. I tried to take its solution and use my mushy human brain to modify it into something that actually worked, but the path it was going down was not quite correct, so there was no salvaging it. Again, the modifications from basic A* to the actual solution are not obvious, so starting from a base of “its just A*” isn’t really that much of a help. Moreover, GPT doesn’t even really recognize that this problem is harder than just “modifying A*”, an insight that would have saved me time when I originally wrote this algorithm.

Anyway, perhaps this is not a problem suited towards GPT. After all, A* is an extremely common algorithm, one which it certainly has thousands or millions of examples of in its training data, so it may be restricted from deviating too far from that, no matter how much you try to push it and help it.

OTHER ATTEMPTS

I tried this again with a couple of other “difficult” algorithms I’ve written, and its the same thing pretty much every time. It will often just propose solutions to similar problems and miss the subtleties that make your problem different, and after a few revisions it will often just fall apart.

In the case of this one (this is how Mew resolves Knockback) it kind of just missed *all* the details of the problem, like that it needs to not let 2 moving objects move onto the same time. This one was particularly bad, in that when I accidentally asked GPT 3.5 instead of GPT 4 it got *much* closer to a real solution than GPT 4 did.

This.. isn’t even close.

When I asked GPT 3.5 accidentally it got much much closer. This is actually a “working solution, but with some bugs and edge cases”. It can’t handle a cycle of objects moving onto each other in a chain, but yeah this is much better than the absolutely nothing GPT4 gave… odd…

Its possible that similar problems to that have shown up in its training set.

It’s hard to think of truly unique problems that it definitely has not seen anything similar to before, at least ones that can be described in a paragraph or two. Lets try a weird constructed example. Let’s have it create a collision detection algorithm between… crescent moon shapes. (I couldn’t find an algorithm for this from a quick google, and it seems somewhat non-trivial, so yeah let’s try this)

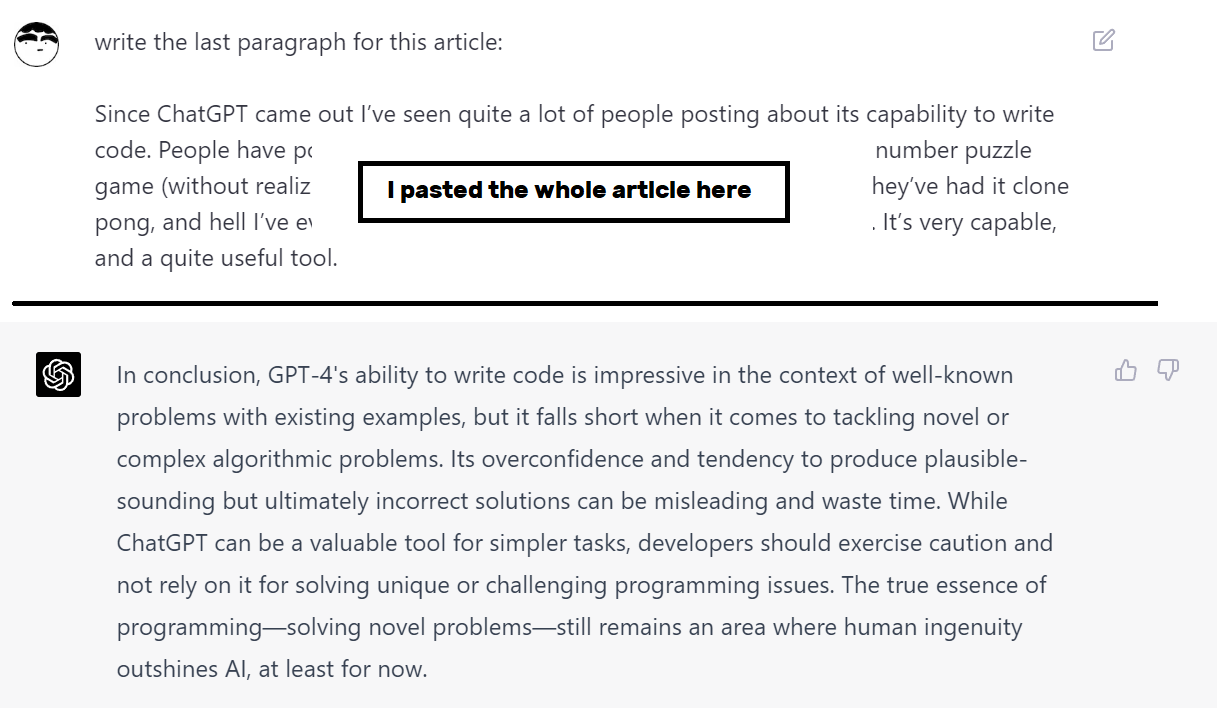

And then it coded that algorithm. To ChatGPT’s credit, it’s not immediately obvious why it’s wrong if you try to think about it. But it ain’t too hard to find a counter example.

I asked it to try again

Again, its actually really hard to find counter examples, but here’s one that fails 2.a (outer circles collide, each inner circle collides with the other’s outer circle but not with each other, yet the crescents are not colliding):

I think ChatGPT is just kind of bullshitting at this point. It doesn’t have an answer, and cannot think of one, so it’s just making shit up at this point. And like… its really good at making shit up though! Like that algorithm… the cases where it fails are subtle! You could easily publish that algorithm in a book and confuse people into thinking they somehow messed up the implementation when there’s bugs in the collision detection, because it certainly *sounds* like the type of algorithm that would solve this.

SO, CAN GPT-4 ACTUALLY WRITE CODE?

Given a description of an algorithm or a description of a well known problem with plenty of existing examples on the web, yeah GPT-4 can absolutely write code. It’s mostly just assembling and remixing stuff it’s seen, but TO BE FAIR… a lot of programming is just that.

However, it absolutely fumbles when trying to *solve actual problems*. The type of novel problems that haven’t been solved before that you may encounter while programming. Moreover, it loves to “guess”, and those guesses can waste a lot of time if it sends you down the wrong path towards solving a problem.

The crescent example is a bit damning here. ChatGPT doesn’t know the answer, there was no example for this in its training set and it can’t find that in it’s model. The useful thing to do would be to just say “I do not know of an algorithm that does this.” But instead it’s overconfident in its own capabilities, and just makes shit up. It’s the same problem it has with plenty of other fields, though it’s strange competence in writing simple code sorta hides that fact a bit.

Anyway, in its own words,

Awesome article!

I've read around various reports where ChatGPT's actual logical thinking and problem-solving performance greatly increases when instructed to "think step by step and explain your reasoning", "describe your approach in great detail before tackling the problem" and such. Maybe that would allow the user to "inject" a step it missed in its reasoning.

I think it would be very interesting to prime it in such a way, correcting and adjusting its "train of thought" (so to speak) and maybe ask for a pseudocode implementation of the algorithm, before it even attempted to write a single line of actual code. I have no idea if it would matter, but from what I can recollect I've had better/more appropriate results when I tried to allow it for more prior planning instead of asking for code right away. Specifically, I noticed it tends to end in those "wrong solutions loop" (I've had those too) much less often. That was on ChatGPT3.5 though, I've no access to v4 ATM.

Perhaps I'm missing a detail, but why is the pathfinding problem here not a textbook variation on Dijkstra? To quote WP: https://en.wikipedia.org/wiki/Dijkstra%27s_algorithm#Related_problems_and_algorithms

> The functionality of Dijkstra's original algorithm can be extended with a variety of modifications. For example, sometimes it is desirable to present solutions which are less than mathematically optimal. To obtain a ranked list of less-than-optimal solutions, the optimal solution is first calculated. A single edge appearing in the optimal solution is removed from the graph, and the optimum solution to this new graph is calculated. Each edge of the original solution is suppressed in turn and a new shortest-path calculated. The secondary solutions are then ranked and presented after the first optimal solution.

This would seem to cover your fire example and your different-requested-ranges example. You do Dijkstra to obtain the feasible path (taking into account movement penalties like water); then you calculate the next less optimal as described; you calculate the total damage of the feasible paths, sort by least damage to find the safest but still feasible path to show the player, and there you go - you get the safest feasible path first, then a modest amount of damage, then more damage, etc. This also covers the tradeoff between range and damage: if you target a nearby tile, then there will almost certainly be a safe feasible path, and an intermediate range may be able to avoid most damage, while if you target one at the maximum range, then you will just have to incur whatever damage happens along the way because that will be the only feasible path. This also naturally handles differently weighted damage if you have more hazards than just fire. Plus, it sounds much more straightforward and debuggable than whatever it is you have because it's factorized into first feasible movement, and then preference over movements, so you can see where any bugs come from: did the movement planning erroneously think a path was impossible, or did the preference misrank it?

> or if you think that it really could just be A* with a modified heuristic instead

I don't think that's equivalent to A* with a modified heuristic.